On November 6th the Compassion Through Algorithms Vol. II compilation was released, raising money for Young Minds Together. The compilation is still available, and of course you can donate directly to Young Minds Together if you prefer.

In this blog post I’ll be going over how I made my track, Pulse.

I’m two years into making music and I’ve recently become more comfortable and confident in my processes. I’ve gotten over the technological hurdles and, having experimented in making music/sounds of different styles both in private and at Algoraves, I feel I’ve found a range of styles that I like making music in. In the live coding music world some of my biggest influences have been eye measure, Miri Kat, Yaxu, and Heavy Lifting. Their work spans many genres but what I’m drawn to in their music is the more sparse, ambient and even sometimes aggressive sounds. I tried to keep this in mind when making Pulse.

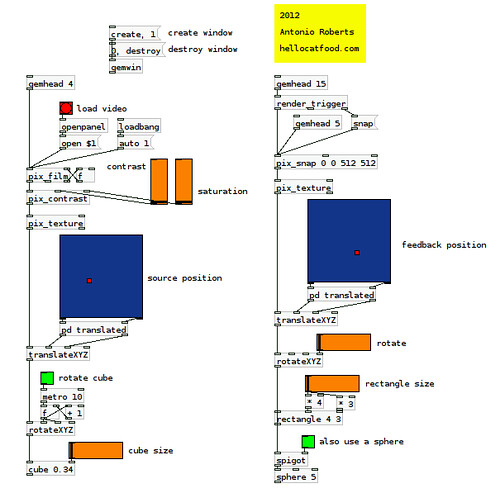

As with most things I make, I started by just experimenting. I can’t fully remember my thought process but at some point I landed on turning a kick drum (“bd” in Tidal) from a percussive into a pitched instrument. I achieved this by triggering the sample many times in quick succession and playing with the speed at which it was played back.

    setcps (135/60/4)

    d1 $ sound "bd*4"

    d7
      $ loopAt "0.75 0.25"
      $ chunk 4 (slow "2 4")
      $ sound "bd*<32 16> kick*32"
      # speed (fast "0.25 1" $ range 4 "<0.25 0.75 0.5>" $ saw)
      # accelerate "3 2 0"
      # gain 0.75
      # pan (slow 1.2 $ sine)

I like the piercing buzzing nature of the sound and so decided to focus on building the track around this. Next I had to get the tempo right. By default Tidal runs at 135 bpm (0.5625 cps). Running that code at 135 bpm felt way too fast and so I tried bringing it down to 99 bpm.
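
The conversion between bpm and Tidal's cycles per second can be sketched in plain Haskell. This is only an illustration of the arithmetic behind `setcps (135/60/4)`: the `bpmToCps` helper is a made-up name, not a Tidal function, and it assumes four beats per cycle.

```haskell
-- Convert beats per minute to cycles per second.
-- bpmToCps is a hypothetical helper for illustration, not part of Tidal.
bpmToCps :: Double -> Double -> Double
bpmToCps beatsPerCycle bpm = bpm / 60 / beatsPerCycle

main :: IO ()
main = do
  -- Tidal's default tempo, assuming 4 beats per cycle
  print (bpmToCps 4 135)
  -- the slower tempo tried for this track
  print (bpmToCps 4 99)
```

The first value matches the 0.5625 cps default, and 99 bpm comes out at roughly 0.4125 cps.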

It’s no longer at a speed to dance to but makes for better listening. It also meant I could more accurately identify what note the buzzing sound was at. The loopAt command affects the pitch of the samples and it is itself affected by the tempo that Tidal is running at, so setting it at 99 bpm (setcps (99/60/4)) revealed that the buzzing sound was at a G-sharp. It’s probably still a little bit out of tune but it’s close enough!
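
To get a feel for how much a tempo change can move the pitch, here is a rough Haskell sketch. It rests on an assumption that is not stated in this post: that with loopAt the sample's playback rate scales linearly with the tempo. The `semitones` helper is a made-up name for illustration.

```haskell
-- Pitch change in semitones when the playback rate is multiplied by `ratio`.
-- semitones is a hypothetical helper, not part of Tidal.
semitones :: Double -> Double
semitones ratio = 12 * logBase 2 ratio

main :: IO ()
main = do
  let oldBpm = 135 :: Double
      newBpm = 99 :: Double
  -- Assumption: playback rate is proportional to tempo when using loopAt.
  print (semitones (newBpm / oldBpm))
```

Under that assumption, dropping from 135 bpm to 99 bpm lowers the sound by a little over five semitones, which is why the note only became clear once the tempo was settled.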

In late August I bought + was given the Volca Bass and the Volca FM synths. By this time I had been using bass samples in this track but saw this as an opportunity to give these newly acquired synths a try! The Tidal website has instructions on setting up MIDI, which worked well. One issue was that I was using two of the same USB-to-MIDI adaptors. On the surface this isn’t an issue, but, at least according to the Tidal MIDI instructions, a MIDI device is added by name and not by any sort of unique ID. Running MIDIClient.init; in SuperCollider with both adaptors connected gave me this:

MIDIEndPoint("USB MIDI Interface", "USB MIDI Interface MIDI 1")

MIDIEndPoint("USB MIDI Interface", "USB MIDI Interface MIDI 1")

I didn’t know which of the two adaptors Tidal was going to send MIDI messages to, and so had no idea which synth would be triggered! Fortunately Alex McLean was on hand to provide a (Linux-specific) solution. The dummy Midi Through Port-0 port exists by default and so Alex suggested adding another one. I’ll quote Alex from the Toplap chat:

if you add options snd-seq-dummy ports=2 (or more) to /etc/modprobe.d/alsa-base.conf

you’ll get two of them

the other being

Midi Through Port-1

obvs

then you can tell supercollider/superdirt to connect to them

then go into qjackctl and the alsa tab under ‘connect’ to connect from the midi through ports to the hardware ports you want

then you can make them connect automatically with the qjackctl patchbay or session thingie

I like doing it this way because it means I can just start supercollider+superdirt then change round which midi device I’m using super easily.. plugging/unplugging without having to restart superdirt

I don’t know if this will solve the problem of having two devices with the same name but hopefully..

With that all fixed I recorded my track! Here’s a live recording of me, um, recording it. It is made using Tidal; the code is just on a screen out of shot.

As you may have noticed there’s some latency on the Volca Bass. I should have adjusted the latency in Jack to account for this but at the time I didn’t realise that I could do this, or how to do it. However, I was recording the Volca Bass and FM onto separate tracks in Ardour so I was able to compensate for the latency afterwards.

On reflection I should have recorded each orbit (d1, d2 etc) into separate tracks. At the time I didn’t realise I could do this but it’s pretty simple, with clear instructions located on the Tidal website, and there are friendly people on the Toplap chat who helped me. This would have allowed me to do additional mixing once it was recorded (my Tidal stuff is typically way too loud). Aside from those observations I’m really happy with how it sounds! I’ve shared my code below, which may be useful to study but of course you’ll need Volcas/MIDI devices to fully reproduce it.

    setcps (99/60/4)

    d1 -- volca fm
      $ off 0.25 ((fast "2") . (|+ note "12 7"))
      $ note "gs4'maj'7 ~"
      # s "midi1"

    d6
      $ stack [
        sound "kick:12(5,8) kick:12(3,<8 4>)",
        sound "sd:2",
        stutWith 2 (1/8) ((fast 2) . (# gain 0.75)) $ sound "hh9*4",
        sound "bd*16" # speed 2 # vowel "i"
      ]

    d4 -- volca bass
      $ fast 2
      $ stutWith 2 (1/4) ((|+ note "24") . (slow 2))
      $ note "~ ~ ~ gs2*2"
      # s "midi2"

    d7
      $ loopAt "0.75 0.25"
      $ chunk 4 (slow "2 4")
      $ sound "bd*<32 16> kick*32"
      # speed (fast "0.25 1" $ range 4 "<0.25 0.75 0.5>" $ saw)
      # accelerate "3 2 0"
      # gain 0.75
      # pan (slow 1.2 $ sine)

    d2 -- transpose volca fm
      $ segment 32
      $ ccv 50
      $ ccv (range 10 (irand 10+60) $ slow "8 3 7 3 1" $ sine )
      # ccn "40"
      # s "midi1"

If you enjoyed my track or any of the others on the compilation please consider buying the compilation or making a donation to Young Minds Together and help the fight against racial injustice.